Machine Learning (ML) has made great strides in many areas, including machine translation, autonomous vehicle control, image classification, and games on Xbox, PlayStation, Nintendo, and Steam. This has made Artificial Intelligence (AI) popular, but securing the information in it is challenging.

Let’s take a look at an industry whose products many of us use: the auto industry. We will find out why security is essential to protect the community when building a Machine Learning platform there.

First Car

When Bertha Benz made the first drive visiting her mother in Germany, she was creating history. She became the first person to drive a car for a little more than 100 km. [1]

Although it looks like she was using her husband’s car to visit her mother, her motive was to market her husband’s invention to the world. That was probably the most effective way to socialize the product at that time. There was no digital media or social network. With an internal combustion engine, tubular steel frame, and three wheels, the only electrical connection was the high-voltage ignition with a spark plug.

Moving to a Platform Business

Today, Mercedes-Benz cars are loaded with the Mercedes-Benz User Experience (MBUX) infotainment system and learning software that enables a customizable presentation. [2] The system offers intelligent voice control and navigation with augmented reality. The car comes with an ecosystem of apps and services that allows for an in-car office. Such an approach adds value for Benz customers while benefiting its shareholders, employees, suppliers, and society. The automobile company is now transitioning from a pure product company to a platform ecosystem. Why is this happening?

That’s because companies that don’t have a strategy for a platform world will have to begin planning their exit. [3] If we look at Interbrand’s Best Global Brands 2019, most of the successful ones are technology companies. [4]

Twenty of the top 32 are commodity-based pipeline businesses. They build products for their consumers using materials provided by their suppliers. A good example is Coca-Cola. It produces packaged beverages using raw materials such as water, coke, and other ingredients. Coca-Cola used to be at the top, but no more.

Seven on the list are traditional pipeline businesses that are slowly transforming into platform businesses. Toyota, Daimler, and BMW are some of them. Not all such transformations succeed; GE is an example. To get it right, these companies need a business use case, the appropriate technology to support it, and a community to use it.

Daimler saw the need for a platform business in 2015. The head of Mercedes-Benz at the time said that he would not allow Daimler to be demoted to the role of a dumb supplier that simply produces cars for Google and Apple. [5] He saw it coming five years ago, and the company’s plans are working well.

Pipeline versus Platform Business

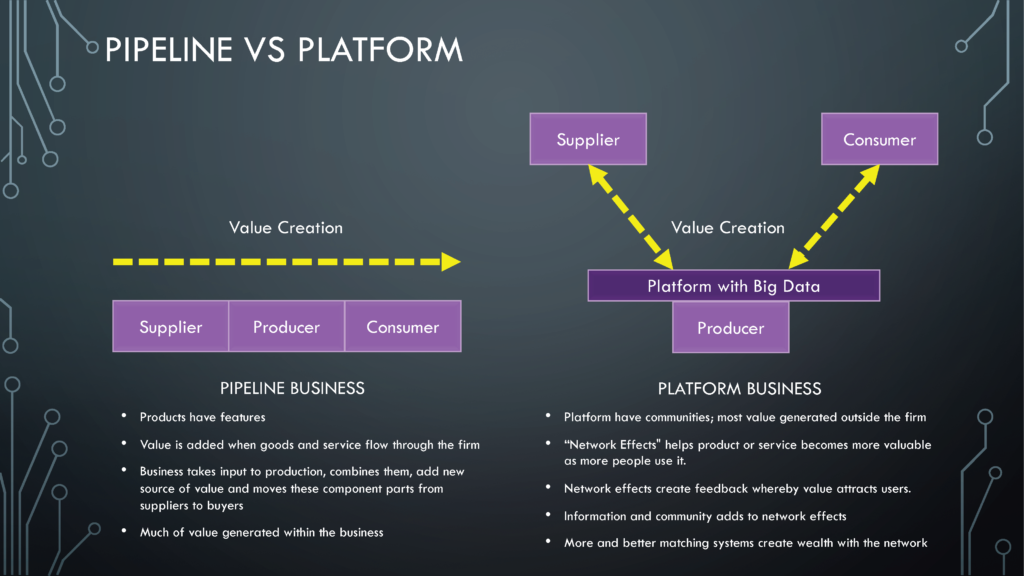

In the traditional pipeline business, products have features. The value in the product is generated when goods and services flow through the firm’s pipeline. [6]

The business takes the inputs to production, combines them, adds new sources of value, and moves the result from suppliers to buyers. Much of the value is generated within the business.

However, in a platform business, value is generated outside the company. The platform enables continuous interaction between suppliers and consumers, which creates a network effect: the products and services become more valuable as more people use them. The feedback this creates attracts more suppliers and users.

If Bertha wanted to promote a car today, she would probably make a video of herself and her dog enjoying a scenic drive around New England, showcasing all the features the car has to offer. Or she could hire professional drivers to create a video imitating a Hollywood spy movie car chase. Bertha could then post the video on platforms like YouTube and Facebook. While promoting her video, she would also get feedback from the audience through likes, shares, and comments. Such feedback would help her realign her strategy for the market and the products.

Whenever a stakeholder interacts with a platform, more data is generated. Such data, combined with the right context, becomes information that powers the platform and adds value to it. To make the platform successful and create wealth, we need an excellent system for matching suppliers with consumers. That’s where we need well-planned Machine Learning.

Platform Suppliers and Consumers

Some examples of platforms with suppliers and consumers include Android developers and users, Amazon sellers and buyers, Facebook posters and readers, or Upwork projects and freelancers.

In all these, production and consumption are moved off the platform. The business creates and curates the platform while the suppliers and consumers interact and generate value in it.

The trick for a successful platform is to have a proper Machine Learning system to match and support producers and consumers. Low-quality participants on the platform create an adverse selection problem. A crucial role of the platform is to push out low-quality participants and bring in good-quality ones.

Most of these successful platforms have a quality scoring mechanism or a reputation system to curate participation. Investing in such AI rewards high-quality participants and supports a visible, profitable business.
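
As a rough illustration of a quality scoring mechanism, here is a minimal sketch that uses a Bayesian average, one common way to keep a participant with a handful of ratings from outranking an established one. The function names, the 1-to-5 rating scale, the prior values, and the threshold are all assumptions made for this example; they do not describe how any particular platform scores its participants.

```python
def reputation_score(ratings, prior_mean=3.0, prior_weight=5):
    """Bayesian average of 1-5 ratings (hypothetical scale).

    With few ratings the score stays close to prior_mean; as ratings
    accumulate, the score approaches the participant's own average.
    """
    total = prior_mean * prior_weight + sum(ratings)
    return total / (prior_weight + len(ratings))


def curate(participants, threshold=2.5):
    """Keep only participants whose reputation meets the (assumed) threshold."""
    return {
        name: ratings
        for name, ratings in participants.items()
        if reputation_score(ratings) >= threshold
    }


participants = {
    "new_seller": [5],                 # one rating: score stays near the prior
    "trusted_seller": [5] * 100,       # many ratings: score near 5
    "poor_seller": [1] * 50,           # many low ratings: filtered out
}
kept = curate(participants)
```

A new seller with a single five-star rating scores about 3.3 here, well below the seller with a hundred five-star ratings, so one lucky review cannot dominate the ranking.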

Security Risk in Automotive Platforms

Though ML and AI have been around for quite some time, the need for secure machine learning systems gained traction after some recent incidents. Before we go into them, let’s take a look at some of the security risks.

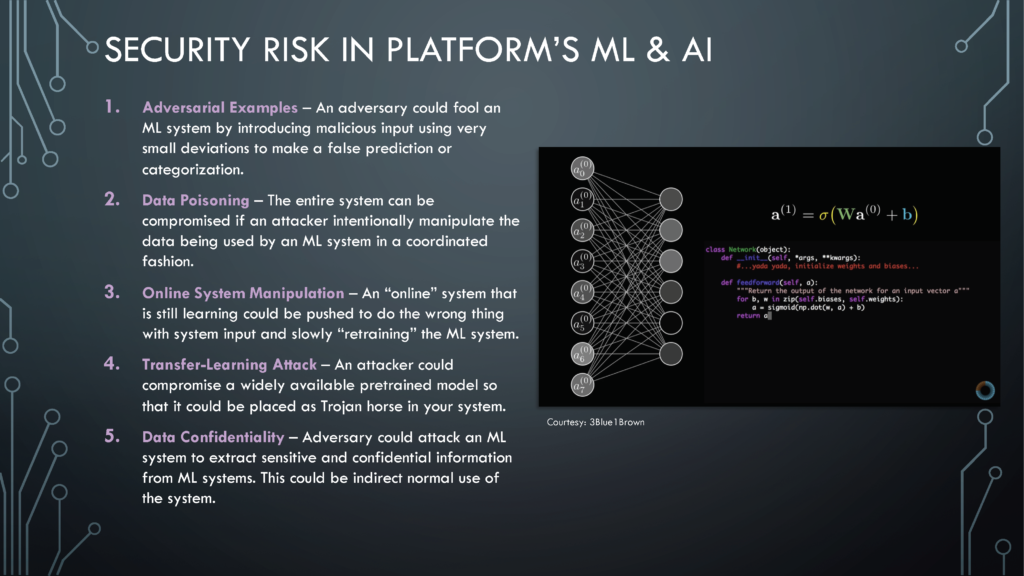

Adversarial examples

According to Gary McGraw, adversarial examples are one of the most critical attacks threatening machine learning systems. [7] This attack aims to fool the machine learning model by feeding it input that has been maliciously nudged in tiny ways, causing the model to make false predictions or categorizations. Think about the neural networks that we build: a slight variation in the input data could have an adverse impact on the output. Adversarial examples need to be planned for in the machine learning design to avoid such mistakes.
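
To make the idea of “tiny nudges” concrete, here is a minimal sketch of the fast gradient sign method (FGSM), a well-known way to craft adversarial examples, applied to a toy logistic regression classifier. The weights, the input, and the step size `epsilon` are made-up values for illustration; a real attack would target a trained model, usually a neural network.

```python
import math


def predict(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    return (value > 0) - (value < 0)


def fgsm_perturb(weights, x, true_label, epsilon):
    """Shift every feature by epsilon in the direction that increases the loss.

    For cross-entropy loss the gradient with respect to x_i is (p - y) * w_i.
    Since 0 < p < 1, its sign is -sign(w_i) when y == 1 and sign(w_i) when y == 0.
    """
    direction = -1 if true_label == 1 else 1
    return [xi + epsilon * direction * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model and input, chosen only for this example.
weights, bias = [2.0, -3.0, 1.0], 0.0
x = [0.3, 0.1, 0.2]

x_adv = fgsm_perturb(weights, x, true_label=1, epsilon=0.15)
print(predict(weights, bias, x))      # above 0.5: classified as class 1
print(predict(weights, bias, x_adv))  # below 0.5: classification flipped
```

No feature moves by more than 0.15, yet the predicted probability drops from about 0.62 to about 0.40 and the class flips. Defenses such as adversarial training try to make the model less sensitive to this kind of perturbation.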

Data Poisoning

Since data plays such a massive role in machine learning security, an attacker who can purposely manipulate the machine learning system’s data can compromise the entire system. Machine Learning engineers should assess which training data an attacker could control and to what extent they could control it. Doing so helps prevent data poisoning.
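
One simple way to start that assessment is to measure how much of the training set comes from sources an attacker could influence. The sketch below assumes each training record carries a `source` field and that we maintain a list of trusted sources; both the field name and the source names are hypothetical.

```python
from collections import Counter


def attacker_controllable_share(records, trusted_sources):
    """Fraction of training records that come from untrusted sources."""
    counts = Counter(record["source"] for record in records)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    untrusted = sum(n for source, n in counts.items() if source not in trusted_sources)
    return untrusted / total


# Hypothetical training records; only the provenance field matters here.
records = [
    {"source": "fleet_telemetry", "label": "stop_sign"},
    {"source": "fleet_telemetry", "label": "yield_sign"},
    {"source": "fleet_telemetry", "label": "stop_sign"},
    {"source": "public_upload", "label": "speed_limit"},
]

share = attacker_controllable_share(records, trusted_sources={"fleet_telemetry"})
print(f"{share:.0%} of the training data comes from untrusted sources")
```

A high share does not prove the data is poisoned, but it tells us where extra validation, sampling, or outright exclusion is worth considering.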

System Manipulation

When a machine learning system continues to learn and modify its behavior while in operational use, it is said to be “online.” Experienced hackers can subtly move an online system in the wrong direction by feeding it the wrong inputs. Such inputs retrain the system to give misleading outputs. This type of attack is easy to execute and subtle enough to be successful. To prevent it, machine learning engineers must consider algorithm choice, data provenance, and machine learning operations to adequately protect the system.
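
The drift can be illustrated with a toy online learner: an exponentially weighted estimate that updates on every input. The sketch below is an assumption-laden illustration rather than a real defense. It compares an unguarded learner with one whose per-update change is capped by a hypothetical `max_step` parameter; the cap slows manipulation but does not stop it, which is why data provenance and monitoring still matter.

```python
def online_estimate(stream, start, rate=0.1, max_step=None):
    """Update a running estimate from a stream of inputs.

    If max_step is set, each update is clipped so that a single input
    cannot move the estimate by more than max_step.
    """
    estimate = start
    for value in stream:
        step = rate * (value - estimate)
        if max_step is not None:
            step = max(-max_step, min(max_step, step))
        estimate += step
    return estimate


# The legitimate value is around 10; the attacker feeds 50 inputs of 100.
poisoned_stream = [100.0] * 50

unguarded = online_estimate(poisoned_stream, start=10.0)
guarded = online_estimate(poisoned_stream, start=10.0, max_step=0.05)
print(unguarded)  # has drifted almost all the way to the attacker's value
print(guarded)    # has moved by at most 50 * 0.05 = 2.5
```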

Transfer learning attack

Many machine learning systems are built by fine-tuning a widely available pre-trained model, a practice known as transfer learning. In a transfer learning attack, the attacker uses a publicly available model as cover for malicious machine learning behavior. If we use a pre-trained model, its documentation should describe in detail exactly what the model does and what the creator has put in place to control the risks.
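
One basic precaution before fine-tuning a downloaded model is to check that the file matches the checksum published by its creator. The sketch below uses Python’s standard `hashlib` and `hmac` modules; the function names are hypothetical, and the expected digest would have to come from the model publisher through a channel we trust. Note that a matching checksum only shows the file was not altered after publication. It does not show that the published model itself is benign.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file without loading it all into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(path, expected_sha256):
    """Return True only if the file's digest equals the publisher's digest."""
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

A pipeline could call `matches_published_checksum` right after the download step and refuse to load the weights when it returns `False`.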

Data Confidentiality

Protecting confidential data is already tricky, regardless of whether it is part of a machine learning system or not. Machine learning brings additional challenges, since sensitive information is built into the model through training. Subtle but effective attacks can extract that data from a machine learning system, which makes them especially risky. To protect your system from this type of attack, it is necessary to build security protocols into the model from the beginning stages of the machine learning lifecycle.

Top 2020 Cyber Incidents in Automotive

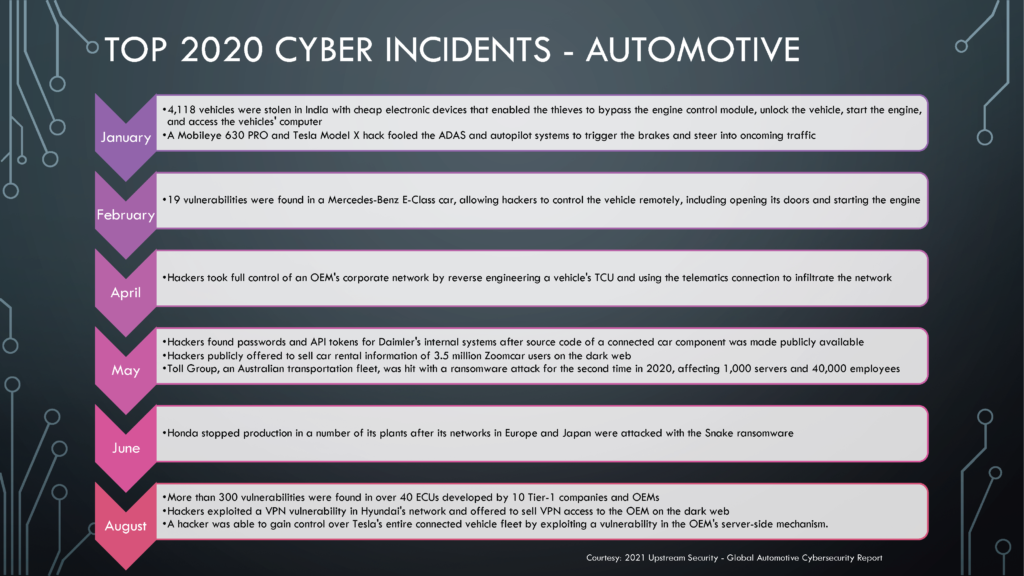

Upstream Security recently shared their 2021 Upstream Global Automotive Cybersecurity Report. [8]

In January 2020 alone, India saw more than 4,000 vehicles stolen by bypassing the engine control module. That same month, hackers showed how they could remotely trigger the brakes and steer an autonomous car into oncoming traffic.

In February 2020, hackers showed how they could unlock the doors of a Mercedes-Benz and start its engine.

Some of the attacks involve adversaries getting access to the enterprise by hacking the cars it built. It’s interesting to see how hackers look for small things to get into the network. Such items include code, API tokens, and passwords. They get into the network, steal intellectual property, and sell it on the dark web. In some attacks, the network is held hostage using ransomware until the company pays.

Sometimes adversaries attack one of our third parties because it is an easy way into our network. We may have a robust security program, yet we could still be attacked if we don’t ensure that our third parties hold to the same standards.

Most Common Attack Vectors in Automotive

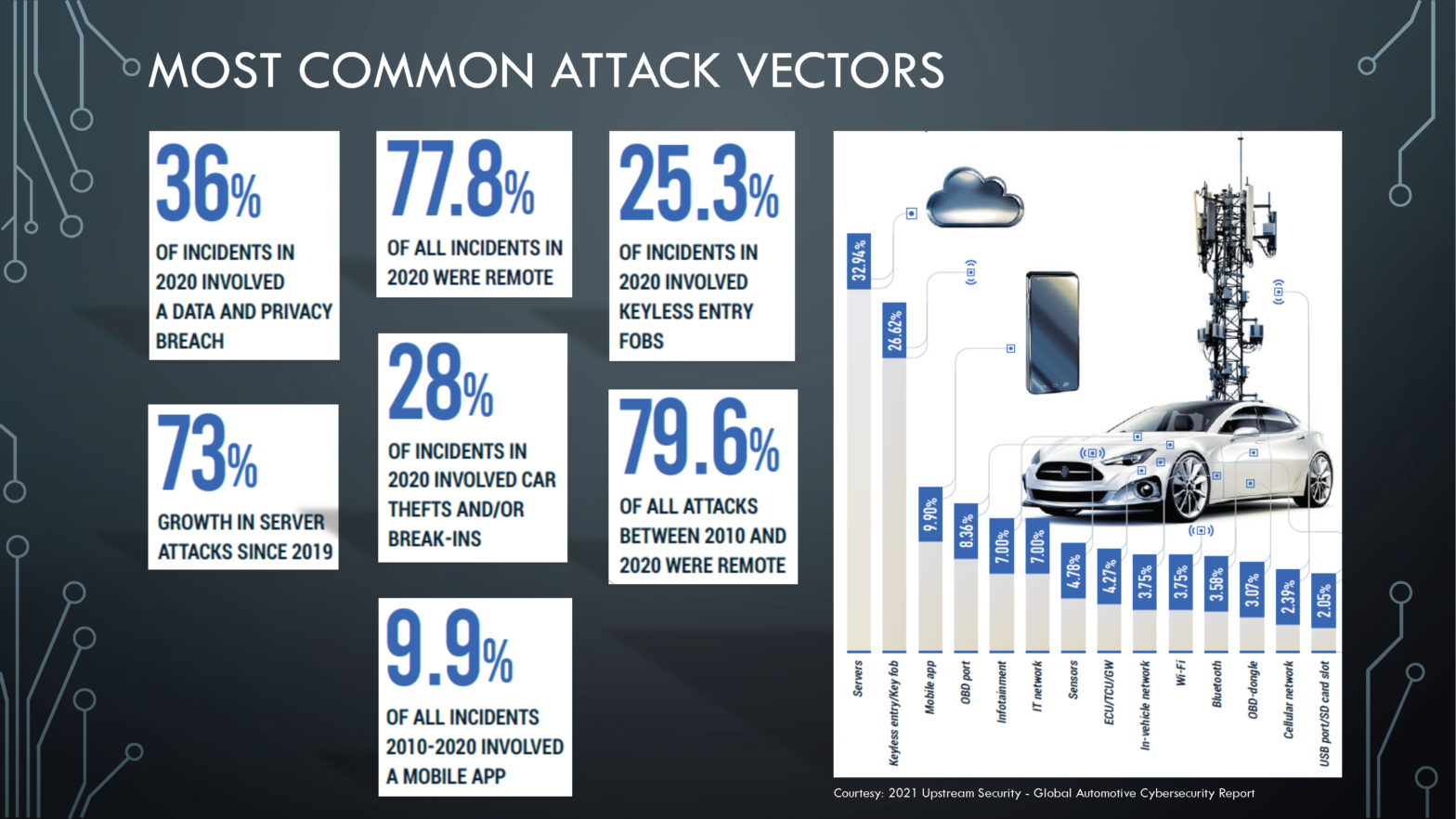

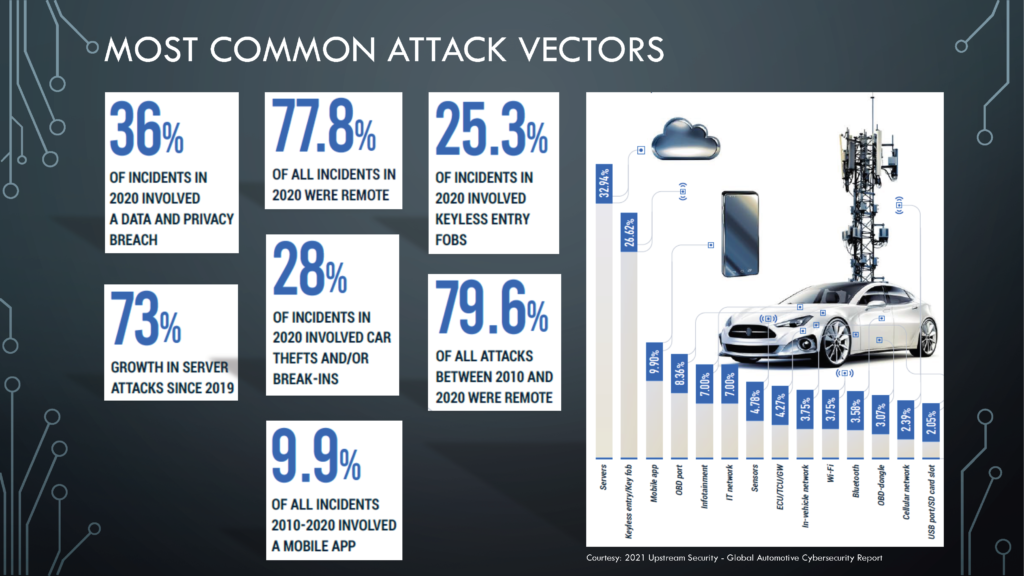

There are many ways to attack a car or its supporting systems. Adversaries could get in through servers, key fobs, mobile apps, the infotainment system, the IT network, sensors, Wi-Fi, Bluetooth, the cell network, or something as simple as a USB port.

77% of all incidents reported in 2020 involved remote attacks, with 25% involving a keyless entry fob and 36% involving a data privacy breach. 28% of the incidents consisted of car theft or break-ins.

These are ordinary cars that we all use. When we build a platform to support ML and AI, we must make it secure and robust. According to the Upstream report, about 86% of the automobiles we use will be “connected” by 2025. They’ll be far more sophisticated than they were when General Motors (GM) launched what could be considered the first “connected car” model in 1996 with only an emergency call system.

Each data breach has an adverse effect on the community and the economy. According to a report shared by IBM Security based on a study with the Ponemon Institute, the average cost of a data breach in 2020 was $3.86 million. [9] The US and the health care industry top the chart. These kinds of attacks lead not only to service disruption or monetary loss but can also result in injury and loss of life, as we saw in the automotive industry. In 2020, personally identifiable information (PII) was the costliest type of data breach for businesses. On average, customer PII cost $150 per lost or stolen record, intellectual property cost $147 per record, anonymized customer data (non-PII) cost $143 per record, and employee PII cost $141 per record.
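
To get a feel for what those per-record figures mean, here is a back-of-the-envelope sketch that multiplies record counts by the averages quoted above. The record counts are invented for the example, and scaling linearly with the number of records is a simplifying assumption; actual breach costs depend on many other factors.

```python
# Average cost per lost or stolen record in USD, as quoted above from the 2020 report.
COST_PER_RECORD_USD = {
    "customer_pii": 150,
    "intellectual_property": 147,
    "anonymized_customer_data": 143,
    "employee_pii": 141,
}


def estimate_breach_cost(record_counts):
    """Rough estimate: number of records of each type times its average cost."""
    return sum(COST_PER_RECORD_USD[kind] * count for kind, count in record_counts.items())


# Hypothetical breach: 1,000 customer records and 200 employee records.
estimate = estimate_breach_cost({"customer_pii": 1000, "employee_pii": 200})
print(f"Estimated cost: ${estimate:,}")
```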

Securing a Machine Learning System

So how do we do it? To begin with, please don’t wait for an incident to happen. Also, don’t wait until the end to fix a bug. The longer we wait, the more we spend fixing the issue. According to the Ponemon study shared by IBM Security, the average time it took to identify and contain a data breach was 280 days. We may save a million dollars if we can identify and contain a breach in under 200 days. Moreover, if we have fully deployed security automation, we may reduce that time by 74 days.

When we develop a business case, we have to make sure it is reviewed from both a use case and an abuse case perspective. Abuse cases describe how hackers could get into the system. Build out a business flow and make sure the flow has appropriate security controls.

At the end of the design, we have to review it with our security team to find security flaws. They would help us develop a threat model showing all possible ways to get into the system based on the design. During the review, the team could advise us on the regulations we need to meet and how we can apply multi-layered security. Examples of such guidance in the auto industry are the regulations of the United Nations Economic Commission for Europe (UNECE) World Forum for Harmonization of Vehicle Regulations (WP.29) and ISO/SAE 21434 by SAE International and the International Organization for Standardization (ISO).

We should address the weaknesses and apply the design suggestions before we send the design to build. The end of the design phase is probably the best time to engage the Incident Response (IR) and Security Operation Center (SOC) team for their review. They could assess the design and ensure appropriate controls are in place to monitor and respond to any breach on time. The average cost of a breach goes down by $2 million if our IR team is prepared.

More than 11,000 Common Vulnerabilities and Exposures (CVE) entries for software security vulnerabilities were reported in NIST’s National Vulnerability Database in the first ten months of 2020. Vulnerabilities may appear on the dark web well before they appear in the CVE list. In May 2020, an adversary published the complete content of a GitLab server that belonged to Daimler. It contained detailed information on a component that simplifies access to and management of live vehicle data.

When we develop the code, we should have our security team review it. The team would check the code for security vulnerabilities both statically and when it is executed. They would also verify whether the flaws found in the design have been addressed. We should review our own code as well as third-party software. The two most common initial threat vectors for a malicious breach are stolen or compromised credentials and cloud misconfiguration, each accounting for 19%. Vulnerabilities in third-party software come next at 16%.
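
Since compromised credentials are such a common entry point, one small check a code review can automate is scanning source files for hardcoded secrets. The sketch below is a deliberately simple illustration: the regular expression and the keyword list are assumptions made for this example and will miss many real cases, so it is no substitute for a dedicated secret-scanning tool.

```python
import re

# Hypothetical pattern: a secret-looking name assigned a quoted literal
# of at least four characters, e.g. api_key = "abcd1234".
SECRET_PATTERN = re.compile(
    r"(password|passwd|secret|api[_-]?key|token)\w*\s*[:=]\s*['\"][^'\"]{4,}['\"]",
    re.IGNORECASE,
)


def find_hardcoded_secrets(source):
    """Return the 1-based line numbers that look like hardcoded credentials."""
    return [
        number
        for number, line in enumerate(source.splitlines(), start=1)
        if SECRET_PATTERN.search(line)
    ]


sample = (
    'user = "bob"\n'
    'api_key = "abcd1234"\n'              # flagged: literal secret in code
    'password = os.environ["PW"]\n'       # not flagged: read from the environment
)
print(find_hardcoded_secrets(sample))
```

Only the second line of the sample is flagged, because the third line reads its value from the environment instead of embedding it in the code.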

Once we have a final integrated product, we should perform a penetration test. We should do it by engaging red teams and blue teams. Red teams simulate an attack, while blue teams ensure the system has an appropriate defense. We should perform this test as frequently as possible. Penetration testing helps ensure that the product stands the test of time.

Conclusion

If appropriately implemented, cybersecurity controls would significantly reduce the impact that could be caused by a vulnerable Machine Learning system and the platform it supports. In doing so, every stakeholder, including shareholders, employees, customers, suppliers, and society, benefits.

References

[1] Daimler, “1885–1886. The First Automobile,” 14 December 2020. [Online]. Available: https://www.daimler.com/company/tradition/company-history/1885-1886.html.

[2] Daimler, “The MBUX (Mercedes-Benz User Experience) Infotainment System: Intuitive Operating Concept With Artificial Intelligence,” 14 December 2020. [Online]. Available: https://media.daimler.com/marsMediaSite/en/instance/ko/The-MBUX-Mercedes-Benz-User-Experience-infotainment-system-Intuitive-operating-concept-with-artificial-intelligence.xhtml?oid=45018666.

[3] M. W. Van Alstyne, G. G. Parker and S. P. Choudary, “Pipelines, Platforms, and the New Rules of Strategy,” April 2016. [Online]. Available: https://hbr.org/2016/04/pipelines-platforms-and-the-new-rules-of-strategy.

[4] Interbrand, “Interbrand Best Global Brands 2019,” 2019. [Online]. Available: https://www.interbrand.com/wp-content/uploads/2019/10/Interbrand_Best_Global_Brands_2019.pdf.

[5] Reuters, “Daimler CEO mulls JVs with Apple, Google: Magazine,” 21 August 2015. [Online]. Available: https://www.reuters.com/article/us-daimler-ceo/daimler-ceo-mulls-jvs-with-apple-google-magazine-idUSKCN0QQ18D20150821.

[6] M. W. Van Alstyne, “How Platform Businesses are Transforming Strategy,” 7 April 2016. [Online]. Available: https://hbr.org/webinar/2016/04/how-platform-businesses-are-transforming-strategy.

[7] G. McGraw, “How to Secure Machine Learning,” 19 June 2020. [Online]. Available: https://www.darkreading.com/application-security/how-to-secure-machine-learning/d/d-id/1338131.

[8] Upstream Security, “Upstream Security’s 2021 Global Automotive Cybersecurity Report,” 2020. [Online]. Available: https://upstream.auto/2021report/.

[9] IBM Security, “Cost of a Data Breach Report 2020,” April 2020. [Online]. Available: https://www.ibm.com/security/digital-assets/cost-data-breach-report/#/.